
Chef Cookbooks for ClusterControl – Management and Monitoring for Your Database Clusters

If you are automating your infrastructure deployments with Chef, then read on. We are glad to announce the availability of a Chef cookbook for ClusterControl. This cookbook replaces previous cookbooks we released for ClusterControl and Galera Cluster. For those using Puppet, please have a look at our Puppet module for ClusterControl.

ClusterControl Cookbook on Chef Supermarket

The ClusterControl cookbook is available on Chef Supermarket, and getting the cookbook is as easy as:

$ knife cookbook site download clustercontrol

This cookbook supports the installation of ClusterControl on top of existing database clusters:

- Galera Cluster
  - MySQL Galera Cluster by Codership
  - Percona XtraDB Cluster by Percona
  - MariaDB Galera Cluster by MariaDB
- MySQL Cluster (NDB)
- MySQL Replication
- Standalone MySQL/MariaDB server
- MongoDB or TokuMX Clusters
  - Sharded Cluster
  - Replica Set

Installing ClusterControl Using the Cookbook

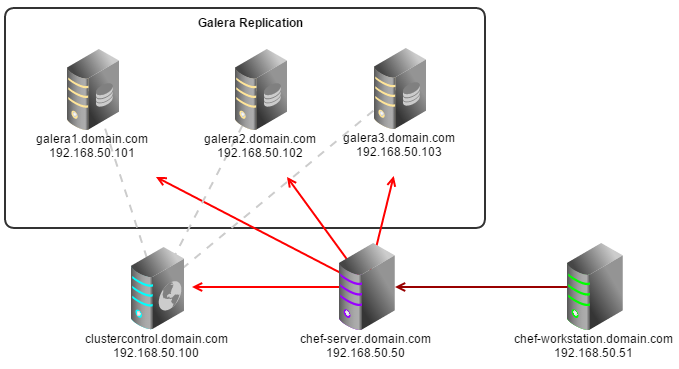

We will show you how to install ClusterControl on top of an existing database cluster using the cookbook. It requires the following criteria to be met:

- The node for ClusterControl must be a clean/dedicated host.

- The ClusterControl node must run on a 64-bit Linux platform, using the same OS distribution as the monitored DB nodes. Mixing Debian with Ubuntu, or CentOS with Red Hat, is acceptable.

- The ClusterControl node must have an internet connection during the initial deployment. After the deployment, ClusterControl does not need internet access.

- Make sure your database cluster is up and running before doing this deployment.
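The OS rule above compares distribution families rather than exact distributions. As an illustration only, here is a minimal Ruby check of the 64-bit and same-family requirements. It assumes you already know each host's architecture string and lowercase distribution name; the `OS_FAMILIES` table, the `same_os_family?` and `controller_host_ok?` helpers, and treating only `x86_64` as 64-bit are hypothetical choices, and the cookbook itself does not necessarily perform such a check:

```ruby
# Hypothetical mapping of distribution names to OS families, covering only
# the distributions mentioned in this post.
OS_FAMILIES = {
  "debian" => :debian,
  "ubuntu" => :debian,
  "rhel"   => :redhat,
  "redhat" => :redhat,
  "centos" => :redhat
}.freeze

# True when both distributions belong to the same known family,
# e.g. Debian with Ubuntu, or CentOS with Red Hat.
def same_os_family?(controller_os, db_node_os)
  a = OS_FAMILIES[controller_os.downcase]
  b = OS_FAMILIES[db_node_os.downcase]
  !a.nil? && a == b
end

# Assumption: only "x86_64" counts as a 64-bit platform here.
def controller_host_ok?(arch, controller_os, db_node_oses)
  arch == "x86_64" &&
    db_node_oses.all? { |os| same_os_family?(controller_os, os) }
end

puts same_os_family?("ubuntu", "debian")                          # true
puts controller_host_ok?("x86_64", "centos", ["rhel", "centos"])  # true
puts controller_host_ok?("x86_64", "ubuntu", ["centos"])          # false
```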

** Please review the official cookbook page for more details.

Chef Workstation

This section requires you to use knife. Please ensure it has been configured correctly and is able to communicate with the Chef Server before you proceed with the following steps. The steps in this section should be performed on the Chef Workstation node.

1. Get the ClusterControl cookbook using knife:

```
$ cd ~/chef-repo/cookbooks
$ knife cookbook site download clustercontrol
$ tar -xzf clustercontrol-*.tar.gz
$ rm -f clustercontrol-*.tar.gz
```

2. Run s9s_helper.sh to auto-generate the SSH key files, the ClusterControl API token, and the data bag items:

```
$ cd ~/chef-repo/cookbooks/clustercontrol/files/default
$ ./s9s_helper.sh
==============================================
Helper script for ClusterControl Chef cookbook
==============================================
ClusterControl requires an email address to be configured as super admin user.
What is your email address? [[email protected]]: [email protected]
What is the IP address for ClusterControl host?: 192.168.50.100
ClusterControl will create a MySQL user called 'cmon' for automation tasks.
Enter the user cmon password [cmon] : cmonP4ss2014
What is your database cluster type? (galera|mysqlcluster|mysql_single|replication|mongodb) [galera]:
What is your Galera provider? (codership|percona|mariadb) [percona]: codership
ClusterControl requires an OS user for passwordless SSH. If user is not root, the user must be in sudoer list.
What is the OS user? [root]: ubuntu
Please enter the sudo password (if any). Just press enter if you are using sudo without password:
What is your SSH port? [22]:
List of your MySQL nodes (comma-separated list): 192.168.50.101,192.168.50.102,192.168.50.103
ClusterControl needs to have your database nodes' MySQL root password to perform installation and grant privileges.
Enter the MySQL root password on the database nodes [password]: myR00tP4ssword
We presume all database nodes are using the same MySQL root password.
Database data path [/var/lib/mysql]:
Generating config.json..
{
  "id" : "config",
  "cluster_type" : "galera",
  "vendor" : "codership",
  "email_address" : "[email protected]",
  "ssh_user" : "ubuntu",
  "cmon_password" : "cmonP4ss2014",
  "mysql_root_password" : "myR00tP4ssword",
  "mysql_server_addresses" : "192.168.50.101,192.168.50.102,192.168.50.103",
  "datadir" : "/var/lib/mysql",
  "clustercontrol_host" : "192.168.50.100",
  "clustercontrol_api_token" : "1913b540993842ed14f621bba22272b2d9471d57"
}
Data bag file generated at /home/ubuntu/chef-repo/cookbooks/clustercontrol/files/default/config.json
To upload the data bag, you can use the following command:
$ knife data bag create clustercontrol
$ knife data bag from file clustercontrol /home/ubuntu/chef-repo/cookbooks/clustercontrol/files/default/config.json

Re-upload the cookbook since it contains a newly generated SSH key:
$ knife cookbook upload clustercontrol

** We highly recommend you to use encrypted data bag since it contains confidential information **
```
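The generated config.json is an ordinary JSON document; its `id` of `config` becomes the data bag item name, which is why knife later reports `data_bag_item[clustercontrol::config]`. A rough Ruby equivalent of what the helper produces for a Galera cluster is sketched below. `build_galera_config` is a hypothetical helper, the e-mail address is a placeholder, and generating the API token with `SecureRandom.hex(20)` is an assumption that only matches the 40-hex-character shape of the token shown above, not necessarily how s9s_helper.sh creates it:

```ruby
require "json"
require "securerandom"

# Hypothetical helper that assembles a Galera data bag item with the same
# keys as the config.json generated by s9s_helper.sh.
def build_galera_config(email:, controller_ip:, ssh_user:, cmon_password:,
                        mysql_root_password:, mysql_nodes:,
                        vendor: "percona", datadir: "/var/lib/mysql")
  {
    "id" => "config",                  # data bag item name
    "cluster_type" => "galera",
    "vendor" => vendor,                # codership, percona or mariadb
    "email_address" => email,
    "ssh_user" => ssh_user,
    "cmon_password" => cmon_password,
    "mysql_root_password" => mysql_root_password,
    "mysql_server_addresses" => mysql_nodes.join(","),
    "datadir" => datadir,
    "clustercontrol_host" => controller_ip,
    # Assumption: a random 40-character hex string; the helper's real
    # token generation may differ.
    "clustercontrol_api_token" => SecureRandom.hex(20)
  }
end

config = build_galera_config(
  email: "admin@example.com",          # placeholder address
  controller_ip: "192.168.50.100",
  ssh_user: "ubuntu",
  cmon_password: "cmonP4ss2014",
  mysql_root_password: "myR00tP4ssword",
  mysql_nodes: %w[192.168.50.101 192.168.50.102 192.168.50.103],
  vendor: "codership"
)
puts JSON.pretty_generate(config)
```

Saving the printed JSON to a file gives you something that can be uploaded with the same `knife data bag from file` command the helper suggests.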

3. Following the instructions above, run these commands from the Chef Workstation host:

```
$ knife data bag create clustercontrol
Created data_bag[clustercontrol]
$ knife data bag from file clustercontrol /home/ubuntu/chef-repo/cookbooks/clustercontrol/files/default/config.json
Updated data_bag_item[clustercontrol::config]
$ knife cookbook upload clustercontrol
Uploading clustercontrol [0.1.0]
Uploaded 1 cookbook.
```

4. Create two roles, cc_controller and cc_db_hosts, as shown below. The ClusterControl controller role:

```
$ cat cc_controller.rb
name "cc_controller"
description "ClusterControl Controller"
run_list ["recipe[clustercontrol]"]
```

The ClusterControl DB host role:

```
$ cat cc_db_hosts.rb
name "cc_db_hosts"
description "Database hosts monitored by ClusterControl"
run_list ["recipe[clustercontrol::db_hosts]"]
override_attributes({
  "mysql" => {
    "basedir" => "/usr/local/mysql"
  }
})
```

** In the above example, we set an override attribute because the MySQL server is installed under /usr/local/mysql. For more details on the available attributes, please refer to attributes/default.rb in the cookbook.
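Chef gives override attributes, like the one set in the cc_db_hosts role, higher precedence than default attributes, and nested hashes are merged key by key rather than replaced wholesale. The plain-Ruby sketch below imitates that merge for just two precedence levels; it is a simplified model, not Chef's implementation, and the default `basedir` and `port` values are hypothetical, so check attributes/default.rb for the cookbook's real defaults:

```ruby
# Recursively merges two attribute hashes: values from `higher` win, and
# nested hashes are merged key by key instead of being replaced.
def deep_merge(lower, higher)
  lower.merge(higher) do |_key, low, high|
    low.is_a?(Hash) && high.is_a?(Hash) ? deep_merge(low, high) : high
  end
end

# Hypothetical cookbook defaults (not copied from attributes/default.rb).
cookbook_defaults = {
  "mysql" => { "basedir" => "/usr", "port" => 3306 }
}

# Override attribute from the cc_db_hosts role above.
role_overrides = {
  "mysql" => { "basedir" => "/usr/local/mysql" }
}

merged = deep_merge(cookbook_defaults, role_overrides)
puts merged["mysql"]["basedir"]  # /usr/local/mysql
puts merged["mysql"]["port"]     # 3306
```

Because the merge is key by key, overriding `basedir` leaves every other default under `mysql` untouched.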

5. Add the defined roles into Chef Server:

```
$ knife role from file cc_controller.rb
Updated Role cc_controller!
$ knife role from file cc_db_hosts.rb
Updated Role cc_db_hosts!
```

6. Assign the roles to the relevant nodes:

```
$ knife node run_list add clustercontrol.domain.com "role[cc_controller]"
$ knife node run_list add galera1.domain.com "role[cc_db_hosts]"
$ knife node run_list add galera2.domain.com "role[cc_db_hosts]"
$ knife node run_list add galera3.domain.com "role[cc_db_hosts]"
```
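With more database hosts, typing one `knife node run_list add` per node gets repetitive. Here is a small Ruby sketch that builds those same commands from a role-to-node mapping; `ROLE_ASSIGNMENTS` and `run_list_commands` are hypothetical names, and the host names are the example ones above. Passing each argument array to `system` runs knife without a shell, so the square brackets in `role[...]` need no quoting:

```ruby
# Hypothetical role-to-node mapping using the example host names above.
ROLE_ASSIGNMENTS = {
  "cc_controller" => ["clustercontrol.domain.com"],
  "cc_db_hosts"   => %w[galera1.domain.com galera2.domain.com galera3.domain.com]
}.freeze

# Builds one knife argument list per node, preserving the mapping's order.
def run_list_commands(assignments)
  assignments.flat_map do |role, nodes|
    nodes.map { |node| ["knife", "node", "run_list", "add", node, "role[#{role}]"] }
  end
end

run_list_commands(ROLE_ASSIGNMENTS).each do |argv|
  puts argv.join(" ")   # preview only
  # system(*argv)       # uncomment to actually run knife
end
```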

Chef Client

Once done, run chef-client on each Chef client node to apply the cookbook:

$ sudo chef-client

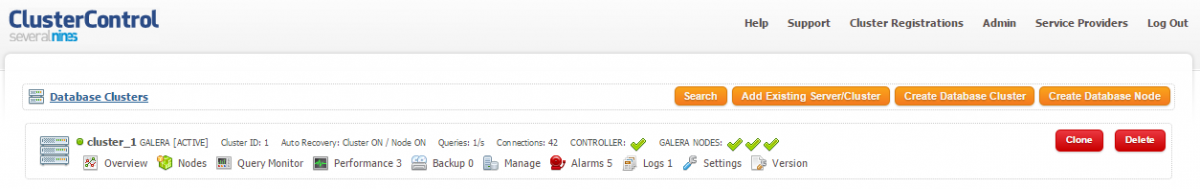

Once completed, open the ClusterControl UI page at http://[ClusterControl IP address]/clustercontrol and login using the specified email address with default password ‘admin’.

You should see something similar to below:

Take note that the recipe will install the RSA key at $HOME/.ssh/id_rsa.

Example Data Bags for Other Clusters

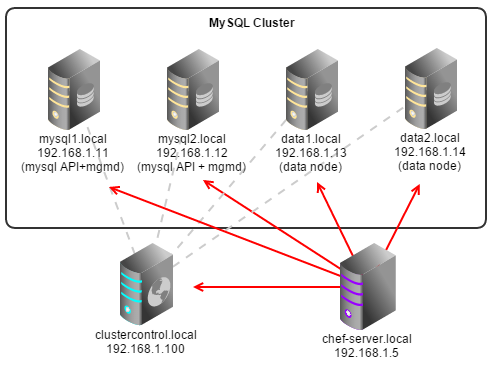

MySQL Cluster

For MySQL Cluster, extra options are needed to allow ClusterControl to manage your management (MGM) and data nodes. You may also need to add the NDB data directory (e.g., /mysql/data) to the datadir list so that ClusterControl knows which partitions to monitor. In the following example, /var/lib/mysql is the MySQL API node datadir and /mysql/data is the NDB datadir.
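Several data bag values, including datadir for MySQL Cluster, are plain comma-separated strings. A short Ruby sketch of splitting them into lists is shown here, using values from the MySQL Cluster data bag in this section. It assumes entries contain no embedded commas and that surrounding whitespace can be ignored; `split_list` is a hypothetical helper, not part of the cookbook:

```ruby
require "json"

# Splits a comma-separated data bag value into a clean array of entries.
def split_list(value)
  value.to_s.split(",").map(&:strip).reject(&:empty?)
end

# Values taken from the MySQL Cluster data bag example.
item = JSON.parse(<<~JSON)
  {
    "datadir": "/var/lib/mysql,/mysql/data",
    "datanode_addresses": "192.168.1.13,192.168.1.14",
    "mgmnode_addresses": "192.168.1.11,192.168.1.12"
  }
JSON

api_datadir, ndb_datadir = split_list(item["datadir"])
puts "MySQL API datadir: #{api_datadir}"   # MySQL API datadir: /var/lib/mysql
puts "NDB datadir: #{ndb_datadir}"         # NDB datadir: /mysql/data
puts "Data nodes: #{split_list(item["datanode_addresses"]).inspect}"
```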

The following figure shows our MySQL Cluster architecture running on Debian 7 (Wheezy) 64-bit:

The data bag would be:

{

"id" : "config",

"cluster_type" : "mysqlcluster",

"email_address" : "[email protected]",

"ssh_user" : "root",

"cmon_password" : "cmonP4ss2014",

"mysql_root_password" : "myR00tP4ssword",

"mysql_server_addresses" : "192.168.1.11,192.168.1.12",

"datanode_addresses" : "192.168.1.13,192.168.1.14",

"mgmnode_addresses" : "192.168.1.11,192.168.1.12",

"datadir" : "/var/lib/mysql,/mysql/data",

"clustercontrol_host" : "192.168.1.100",

"clustercontrol_api_token" : "1913b540993842ed14f621bba22272b2d9471d57"

}

Standalone MySQL or MySQL Replication

The data bag for MySQL Replication is similar to the one for Galera Cluster. In the following example, we have a three-node MySQL Replication setup running on RHEL 6.5 64-bit on Amazon AWS. The SSH user is ec2-user with passwordless sudo:

The data bag would be:

{

"id" : "config",

"cluster_type" : "mysql_single",

"email_address" : "[email protected]",

"ssh_user" : "ec2-user",

"cmon_password" : "cmonP4ss2014",

"mysql_root_password" : "myR00tP4ssword",

"mysql_server_addresses" : "10.131.25.218,10.130.33.19,10.131.25.231",

"datadir" : "/mnt/data/mysql",

"clustercontrol_host" : "10.130.19.7",

"clustercontrol_api_token" : "1913b540993842ed14f621bba22272b2d9471d57"

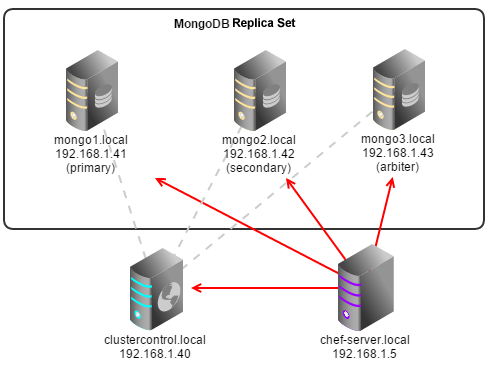

}MongoDB/TokuMX Replica Set

The MongoDB Replica Set runs on Ubuntu 12.04 LTS 64-bit with the sudo user ubuntu and sudo password ‘mySuDOpassXXX’. There is also an arbiter node running on mongo3.local:
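The Replica Set data bag lists data-bearing members and arbiters under separate keys, each as comma-separated host:port pairs. A hypothetical Ruby parser for those two values is sketched below. `Member` and `parse_members` are illustrative names, falling back to MongoDB's default port 27017 when a port is omitted is this sketch's own assumption rather than documented cookbook behaviour, and IPv6 addresses are not handled:

```ruby
# One MongoDB member address from the data bag.
Member = Struct.new(:host, :port, :arbiter)

# Parses a comma-separated "host:port" list; assumes port 27017 if omitted.
def parse_members(value, arbiter: false)
  value.to_s.split(",").map(&:strip).reject(&:empty?).map do |entry|
    host, port = entry.split(":", 2)
    Member.new(host, (port || 27017).to_i, arbiter)
  end
end

members  = parse_members("192.168.1.41:27017,192.168.1.42:27017")
arbiters = parse_members("192.168.1.43:27017", arbiter: true)

(members + arbiters).each do |m|
  role = m.arbiter ? "arbiter" : "data-bearing member"
  puts "#{m.host}:#{m.port} (#{role})"
end
```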

The generated data bag would be:

{

"id" : "config",

"cluster_type" : "mongodb",

"email_address" : "[email protected]",

"ssh_user" : "ubuntu",

"sudo_password" : "mySuDOpassXXX",

"cmon_password" : "cmonP4ss2014",

"mongodb_server_addresses" : "192.168.1.41:27017,192.168.1.42:27017",

"mongoarbiter_server_addresses" : "192.168.1.43:27017",

"datadir" : "/var/lib/mongodb",

"clustercontrol_host" : "192.168.1.40",

"clustercontrol_api_token" : "1913b540993842ed14f621bba22272b2d9471d57"

}

MongoDB/TokuMX Sharded Cluster

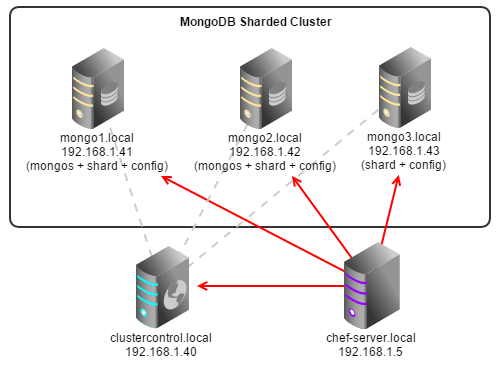

MongoDB Sharded Cluster needs to have mongocfg_server_addresses and mongos_server_addresses options specified. The mongodb_server_addresses value should be to the list of shard servers in the cluster. In the below example, we have a three-node MongoDB Sharded Cluster running on CentOS 5.6 64bit with 2 mongos nodes, 3 shard servers and 3 config servers:

The generated data bag would be:

{

"id" : "config",

"cluster_type" : "mongodb",

"email_address" : "[email protected]",

"ssh_user" : "root",

"cmon_password" : "cmonP4ss2014",

"mongodb_server_addresses" : "192.168.1.41:27018,192.168.1.42:27018,192.168.1.43:27018",

"mongocfg_server_addresses" : "192.168.1.41:27019,192.168.1.42:27019,192.168.1.43:27019",

"mongos_server_addresses" : "192.168.1.41:27017,192.168.1.42:27017",

"datadir" : "/var/lib/mongodb",

"clustercontrol_host" : "192.168.1.40",

"clustercontrol_api_token" : "1913b540993842ed14f621bba22272b2d9471d57"

}
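Since each cluster type expects a different set of keys, a quick sanity check before running `knife data bag from file` can catch a missing option. The Ruby sketch below encodes the keys used by the examples in this post. The key lists are inferred from these examples rather than taken from the cookbook, the `replication` entry is assumed to mirror `mysql_single`, and `check_data_bag` and `check_data_bag_file` are hypothetical helpers:

```ruby
require "json"

# Keys present in every example data bag in this post.
COMMON_KEYS = %w[id cluster_type email_address ssh_user cmon_password
                 datadir clustercontrol_host clustercontrol_api_token].freeze

# Extra keys per cluster type, inferred from the examples in this post
# (not from the cookbook's own validation logic).
EXTRA_KEYS = {
  "galera"       => %w[vendor mysql_root_password mysql_server_addresses],
  "mysqlcluster" => %w[mysql_root_password mysql_server_addresses
                       datanode_addresses mgmnode_addresses],
  "mysql_single" => %w[mysql_root_password mysql_server_addresses],
  # Assumption: no example is shown, so this mirrors mysql_single.
  "replication"  => %w[mysql_root_password mysql_server_addresses],
  "mongodb"      => %w[mongodb_server_addresses]
}.freeze

# Returns a list of problems found in a data bag item (empty if none).
def check_data_bag(item)
  type = item["cluster_type"]
  return ["unknown cluster_type: #{type.inspect}"] unless EXTRA_KEYS.key?(type)

  problems = (COMMON_KEYS + EXTRA_KEYS[type])
             .reject { |key| item.key?(key) }
             .map { |key| "missing key: #{key}" }

  # Flags a MongoDB item that has config servers without mongos routers,
  # or vice versa; a replica set item has neither key.
  if type == "mongodb" &&
     (item.key?("mongocfg_server_addresses") ^ item.key?("mongos_server_addresses"))
    problems << "sharded cluster needs both mongocfg_ and mongos_server_addresses"
  end
  problems
end

# Convenience wrapper for a file such as the generated config.json.
def check_data_bag_file(path)
  check_data_bag(JSON.parse(File.read(path)))
end

# Sharded example above with mongos_server_addresses left out on purpose.
item = {
  "id" => "config", "cluster_type" => "mongodb",
  "email_address" => "admin@example.com", "ssh_user" => "root",
  "cmon_password" => "cmonP4ss2014", "datadir" => "/var/lib/mongodb",
  "clustercontrol_host" => "192.168.1.40",
  "clustercontrol_api_token" => "1913b540993842ed14f621bba22272b2d9471d57",
  "mongodb_server_addresses" => "192.168.1.41:27018,192.168.1.42:27018,192.168.1.43:27018",
  "mongocfg_server_addresses" => "192.168.1.41:27019,192.168.1.42:27019,192.168.1.43:27019"
}
p check_data_bag(item)
# => ["sharded cluster needs both mongocfg_ and mongos_server_addresses"]
```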